
Electronics Control

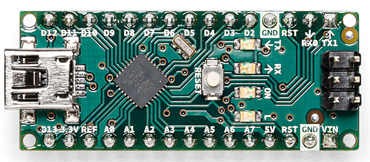

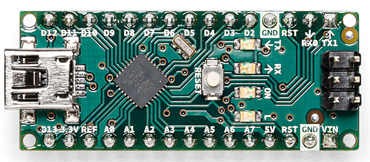

I will be using custom Arduino boards: bare-bones ATmega2560 microcontrollers built dead-bug style. The main PC, a custom mini-ITX gaming PC, will be mounted inside the robot's chest and connected by USB to one of these bare-bones Arduino Mega boards. That board will in turn communicate with a network of other custom Arduino Mega boards, which will interface with the various sensors and with the MOSFET drivers used for field-oriented control (FOC) of the motors. In total, this should let me control around 300 or more servo motors, which should be enough to operate the robot's entire muscular system.

To reach every Arduino board from a single USB port, the PC will connect by USB to one Arduino, which will be daisy-chained to the others. Each Arduino will be assigned a name and will respond only to commands addressed to that name. This way, the single USB port on the PC can fan out to every servo controller board in the network.
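The name-based addressing described above can be sketched as a small simulation. This is a minimal Python model under stated assumptions, not Arduino firmware: the newline-terminated `<name>:<command>` frame format, the board names, and the `SET 3 1200` command are all hypothetical, and each serial hop between boards is modeled as a method call on the next `Node`. A board that sees its own name handles the frame; any other board passes it downstream.

```python
# Minimal simulation of name-addressed frames on a daisy chain.
# ASSUMPTIONS (hypothetical, not from the design): the frame format
# "<name>:<command>\n", the board names, and the command strings.
from dataclasses import dataclass, field
from typing import Optional


def encode(name: str, command: str) -> bytes:
    """Build one hypothetical ASCII frame addressed to `name`."""
    if ":" in name or "\n" in name or "\n" in command:
        raise ValueError("name may not contain ':' and neither part may contain newlines")
    return f"{name}:{command}\n".encode("ascii")


def decode(frame: bytes) -> tuple[str, str]:
    """Split a frame back into (name, command)."""
    name, _, command = frame.decode("ascii").rstrip("\n").partition(":")
    return name, command


@dataclass
class Node:
    """One Arduino board; `downstream` stands in for the serial link to the next board."""
    name: str
    downstream: Optional["Node"] = None
    received: list[str] = field(default_factory=list)

    def on_frame(self, frame: bytes) -> bool:
        """Handle the frame if addressed here, else forward it.

        Returns True if some board in the chain claimed the frame.
        """
        name, command = decode(frame)
        if name == self.name:
            self.received.append(command)
            return True
        if self.downstream is not None:
            return self.downstream.on_frame(frame)
        return False  # end of chain: no board has this name


def build_chain(names: list[str]) -> Node:
    """Link boards in order; the first name is the board plugged into the PC's USB port."""
    head: Optional[Node] = None
    for name in reversed(names):
        head = Node(name, downstream=head)
    if head is None:
        raise ValueError("need at least one board")
    return head


if __name__ == "__main__":
    chain = build_chain(["hub", "left_arm", "right_leg"])
    print(chain.on_frame(encode("right_leg", "SET 3 1200")))  # True
    print(chain.on_frame(encode("nobody", "PING")))           # False
```

On real boards, the same check would run on each line read from the upstream serial port, with unmatched lines written out to the downstream port. Because every frame is relayed through each intermediate board, latency grows with the length of the chain, which is worth measuring once the chain is built.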

The gaming PC, acting as the main brain, will calculate where every servo needs to be and send each one its target position. The Arduinos will drive the servo motor controllers and will report back to the PC with their progress and with any sensor readings on their input pins.

The PC will also connect by USB to a series of Arduino boards that interface with strain gauges, Hall-effect sensors (or potentiometers), current sensors, and similar sensors placed throughout the robot's body. These will let the robot detect when it is touching something, how much strain is being put on its muscle fibers, and so on. Using this information, it can gauge its balance, estimate the weight of objects it is grabbing, and tell whether it is bumping into or tripping over something.

The same PC will also connect by USB to webcam eyeballs (ELP 1080p, 2-megapixel, wide-angle USB cameras). These will let the PC use computer vision to detect objects and interpret the robot's surroundings. The vision system will also let the robot estimate distances to surrounding walls and objects, giving context to and assisting its depth perception.
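The command-and-feedback loop described in this section (the PC sends target positions, and the Arduinos report their progress and pin readings) could be structured along the lines of the sketch below. Everything specific here is a hypothetical placeholder: the `SERVO_MAP` table, the `MOVE channel:angle` command, and the `name:STATUS key=value` report format. Grouping targets by board means each board receives one message per update rather than one per servo.

```python
# Sketch of PC-side command/feedback handling.
# ASSUMPTIONS (hypothetical): SERVO_MAP contents, the "MOVE ch:angle ..."
# command, and the "name:STATUS key=value ..." report format.
from collections import defaultdict

# Global servo id -> (board name, local output channel on that board).
SERVO_MAP: dict[int, tuple[str, int]] = {
    0: ("left_arm", 0),
    1: ("left_arm", 1),
    2: ("right_leg", 0),
}


def target_commands(targets: dict[int, float]) -> dict[str, str]:
    """Group target angles (degrees) into one MOVE command per board."""
    per_board: dict[str, list[str]] = defaultdict(list)
    for servo_id, angle in sorted(targets.items()):
        board, channel = SERVO_MAP[servo_id]
        per_board[board].append(f"{channel}:{angle:.1f}")
    return {board: "MOVE " + " ".join(parts) for board, parts in per_board.items()}


def parse_status(line: str) -> tuple[str, dict[str, float]]:
    """Parse a report such as 'left_arm:STATUS pos0=44.8 a0=512' into (board, readings)."""
    board, _, body = line.strip().partition(":")
    parts = body.split()
    if not parts or parts[0] != "STATUS":
        raise ValueError(f"not a STATUS report: {line!r}")
    readings: dict[str, float] = {}
    for item in parts[1:]:
        key, _, raw = item.partition("=")
        readings[key] = float(raw)
    return board, readings
```

Each string returned by `target_commands` would then be sent in a message addressed to that board's name, and each incoming line from the chain would be passed to `parse_status` to update the PC's view of the robot.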
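If the two webcam eyeballs are mounted side by side and used as a stereo pair (an assumption; this section does not say how distances are computed), the distance to a point can be estimated from its disparity, the horizontal pixel offset between its positions in the left and right images, using the pinhole relation Z = f * B / d. Here f is the focal length in pixels, B is the baseline between the two cameras, and d is the disparity in pixels. The 90-degree field of view and 65 mm baseline below are illustrative values, not ELP specifications, and the relation only holds after the wide-angle images have been undistorted and rectified, for example with OpenCV's stereo calibration functions.

```python
# Stereo depth from disparity for a calibrated, rectified camera pair.
# ASSUMPTIONS: the two webcams are used as a stereo pair; the 90-degree
# horizontal FOV and 65 mm baseline in the example are illustrative values,
# not ELP specifications.
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels: f = (W / 2) / tan(HFOV / 2)."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Distance Z = f * B / d, in the same units as the baseline."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero corresponds to a point at infinity")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    f = focal_length_px(1920, 90.0)  # about 960 px for a 1920-pixel-wide (1080p) frame
    print(round(depth_from_disparity(52.0, f, 0.065), 3))  # 1.2 (metres)
```

Because disparity shrinks as distance grows, a one-pixel matching error changes the estimate much more for a far wall than for a nearby object, so stereo depth is most reliable at short range.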
